The bug in the budgeting process

In my previous post, I mentioned that removing artificial batch limits let us double our performance. But what are those artificial batch limits?

Well, anything that doesn’t involve actual system resources. For example, limiting batch size by time or by document count is artificial. We used to have to do that as a proxy for the amount of managed memory we use, and because it allowed us to parallelize I/O and computation work. Now, each index is actually working on its own, so if one index is stalling because it needs to fetch data, other indexes will use the available core, and everyone will be happy.

Effectively, an indexing batch stopped being a global database event that we had to fetch data for specifically and became something much smaller. That fact alone gave us leeway to remove drastic amounts of code that handled things like prefetching, balancing I/O, memory, time and CPU, and a whole bunch of really crazy stuff that we had to do.

So all of that went away, and we learned that anything that artificially reduces a batch size is bad. We should make the batch size as big as possible to benefit from economies of scale.

But wait, what about non-artificial limits? For example, running an indexing batch takes some memory. We can now track it much better, and most of it is in unmanaged memory anyway, so we don’t worry about keeping it around for a long time. We do worry about running out of it, though.

Say we have six indexes all running at the same time, each trying to use as much of the system resources as it possibly can. If we actually let them do that, they would allocate enough memory to push us into the page file. All our beautiful code would then spend its time just paging in and out from disk, and our performance would look like it was hit in the face repeatedly with the hard disk needle.

So we have a budget. In fact, we have a pretty complete heuristics system in place.

- Start by giving each index 16 MB to run.

- Whenever the index exceeds that budget, allow it to complete the current operation (typically a single document, so pretty small).

- Check if there is enough memory available* that we can still use, and if so, increase the budget by another 16 MB

* Enough memory available is actually a really complex idea, enough so that I’ll dedicate the next post to it.
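
The steps above can be sketched roughly as follows. This is an illustration in Python, not the actual RavenDB code: `IndexBudget`, `try_grow` and `run_batch` are hypothetical names, and "enough memory available" is reduced here to a single fixed limit, which is a big simplification.

```python
from dataclasses import dataclass

MB = 1024 * 1024
BUDGET_STEP = 16 * MB  # from the post: start with 16 MB, grow by 16 MB


@dataclass
class IndexBudget:
    """Hypothetical per-index budget tracker (illustrative only)."""
    budget: int = BUDGET_STEP
    allocated: int = 0

    def over_budget(self) -> bool:
        return self.allocated > self.budget


def try_grow(index: IndexBudget, total_allocated: int, global_limit: int) -> bool:
    """Raise the budget by one step if the (simplified) global limit allows it.

    Returns False when there is no room left, meaning the batch should stop.
    """
    if total_allocated + BUDGET_STEP <= global_limit:
        index.budget += BUDGET_STEP
        return True
    return False


def run_batch(index: IndexBudget, doc_sizes, global_limit: int) -> int:
    """Process documents until the input runs out or the budget cannot grow.

    The budget is only checked after a document completes, so an index can
    overshoot by at most one operation. A single index is assumed here, so
    its own allocation stands in for the total across all indexes.
    """
    processed = 0
    for size in doc_sizes:
        index.allocated += size  # finish the current operation first
        processed += 1
        while index.over_budget():
            if not try_grow(index, index.allocated, global_limit):
                return processed  # real limit hit: complete the batch
    return processed
```

Feeding this ten 10 MB documents with a 64 MB limit stops after five of them: the budget grows from 16 MB to 48 MB, and the next request for another 16 MB is refused.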

So that leads us to all indexes competing with one another to get more memory, until we hit the predefined limit (which is supposed to leave us memory to do other work as well). At that point, we have hit a real limit, so we stop the batch, complete our work and carry on. After the batch is completed, we could release all of that memory and start from scratch, but that would probably be a waste. We already know that we haven’t gone too badly over budget, so why release all that precious memory only to immediately ask for it again?

So that is what we did, and we ran our benchmarks again. And the performance was not nice to us.

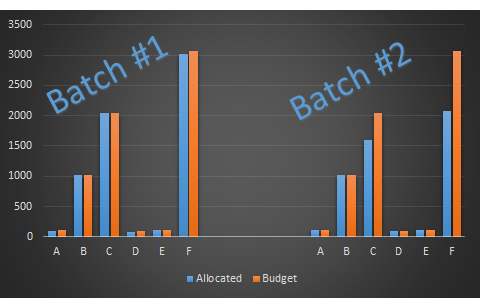

It took a while to figure out what happened, but you can see it in the graph above.

We started allocating memory, and as you can see, some indexes have high memory requirements. At some point, we hit the memory ceiling we specified, and started completing batches so we wouldn’t use too much memory.

All well and good. Except that the act of completing the batch will also (sometimes) release memory. This is typically done because we have found the ideal sizes we need for processing, so we discard everything that is too small. But the allocator is free to release memory if it thinks that this is the best for the system.

Unfortunately, we didn’t adjust the budget in this case. Consider the case of indexes C & F, both of which released a significant amount of memory after the batch was completed. Index B, which was forced to make do with whatever memory it managed to grab, suddenly finds itself in a position to grab more memory, and it will slowly increase its budget and allocations.

At the same time, indexes C & F are also going to allocate more memory. After all, they are well within their budget, since we didn’t account for the released memory that was gobbled up by index B. This starts happening only about 45 minutes into the batch, and it shows up as higher memory utilization only about 4 hours after that, which is really quite annoying when you need to debug it.
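
To make the failure mode concrete, here is a toy simulation with two indexes, B and C. Everything in it is an assumption made for illustration: the names, the 96 MB limit, the allocation sizes, and in particular the "fix" of shrinking the budget by the amount released, which the post does not describe.

```python
MB = 1024 * 1024
STEP = 16 * MB


class Index:
    def __init__(self, name: str):
        self.name = name
        self.budget = STEP
        self.allocated = 0


def total(indexes) -> int:
    return sum(i.allocated for i in indexes)


def allocate(index, size, indexes, limit) -> bool:
    """Allocate `size`, growing the budget one step at a time if needed.

    The global limit is only consulted when the budget has to grow.
    Allocations inside an existing budget are never checked against it.
    """
    while index.allocated + size > index.budget:
        if total(indexes) + STEP > limit:
            return False
        index.budget += STEP
    index.allocated += size
    return True


def complete_batch(index, released, shrink_budget) -> None:
    index.allocated -= released
    if shrink_budget:  # hypothetical fix: give the budget back as well
        index.budget = max(STEP, index.budget - released)


def simulate(shrink_budget_on_release: bool, limit: int = 96 * MB) -> int:
    b, c = Index("B"), Index("C")
    indexes = [b, c]
    for _ in range(4):          # C grabs 64 MB
        allocate(c, STEP, indexes, limit)
    for _ in range(2):          # B makes do with 32 MB; total is now 96 MB
        allocate(b, STEP, indexes, limit)
    complete_batch(c, 48 * MB, shrink_budget_on_release)
    while allocate(b, STEP, indexes, limit):   # B gobbles up the freed memory
        pass
    while allocate(c, STEP, indexes, limit):   # C refills its old budget
        pass
    return total(indexes)
```

With the stale budget left in place, `simulate(False)` ends at 144 MB against a 96 MB limit, because C refills a 64 MB budget that B has already spent. With the budget adjusted on release, `simulate(True)` stays at 96 MB.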

Comments

What would be the benefit for an indexing job to use that much memory? Isn't the indexing job IO bound after all?

Pop Catalin, Actually, they aren't now, we are running through them much faster now :-)

As I understand it, this is not shared memory that indexing jobs allocate, but private memory, in which case it's not clear what the benefit of allocating this much memory is, unless Raven 4.0 forces a write at the end of the batch instead of buffering the data to be written.

Pop Catalin, The actual problem was that we would do just that: we would batch all the small updates and then flush them after the map phase. But in order to figure that out, we also had to figure out how to make them avoid fighting each other for memory.
