The nine hours micro benchmark

We’ve been running all sort of benchmarks on RavenDB, ranging from micro optimizations to a single heavily used routine that focused on re-ordering the instructions so the CPU can execute them in parallel to large scale load testing and data processing.

This tale is about the later. We have defined 6 indexes over a data set of over 18 million documents with a total size of 57GB. We then run it on a system that had 16GB of RAM (and typically had a lot less actually available).

When we started, we very quickly run out of memory, in fact, our allocations exceeded the database size (so we allocated over 60 GB) before we decided to focus on fixing that. The problem was the scope of the batch, it was too long, and we didn’t reuse the memory inside the same batch. Once that was fixed, we were able to run the performance test successfully.

Total time to run it? Over 9 hours. Granted, this is talking about 6 indexes each needing to go over the entire dataset, so we expect it to take a while, and it is much faster than in previous versions, but that is still far too much.

I should probably explain that the whole point of doing something like that is to see the interference effects. What happen when you have a bunch of competing indexes over the same resources (CPU, memory, disk)?

Turn out, there is a lot going there, and you can’t really get good profiling results from this kind of run (to start with, the profiling overhead would push this into a multi day effort), and while we captured some profiling run of shorter stats, we weren’t really able to pinpoint the blame.

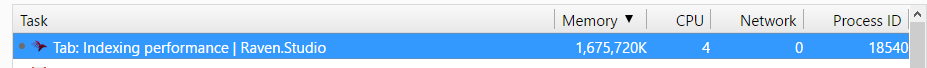

So we added the tooling we needed to figure it out, and then put that in the studio. The end result was… not so good.

Yes, that is the studio trying to display the debug information for that benchmark. It… did not go so well for us. In other words, before we could fix the benchmark, we had to optimize the code that would tell us where we are spending all that time in the benchmark ![]() .

.

As it turned out, the problem was that our code was optimizing for problems we no loner had. Basically, in RavenDB 3.x we have to take into account our memory usage, and pretty much all of it is managed memory. That lead to some interesting problems, to whit, we don’t know how much memory we use. The fact that a document is size so and so when serialized to disk means exactly squat with regards to how much it is actually going to take in memory. And because too much allocations lead to higher GC costs down the road, we put term limits on the size of the indexing batches we’ll process.

Effectively, we will take a certain amount of documents to process in a batch, and then complete the batch. All the memory we used in the batch will go away, and hopefully we didn’t push too much of it into higher generations. But the real costs that we have in RavenDB 4.x are very different. To start with, we use a lot less managed operations, and we use Voron for pretty much everything. That means that our costs has now shifted, instead of worrying about releasing the memory as quickly as possible, we need to worry about reducing the number of times we go to disk.

As it turns out, artificially reducing the batch size results in us processing more batches, which require us to hit the disk a lot more. The same logic applies to RavenDB 3.x (and we have users who have configured RavenDB to have very long batches for exactly that reason), but that come at GC cost that simply does not exist in RavenDB 4.0.

The immediate solution was to remove all the batch limits and see what would happen. Overall performance had doubled. We were able to process the same amount of information in about half the time. And that is before we did deep dive with a profiler to seek inefficiencies.

Comments

Comment preview